Last Updated on January 8, 2025 by Steven W. Giovinco

Introduction

Generative AI tools like ChatGPT and Gemini have transformed how information is created and shared, but they also fuel the rapid spread of misinformation, harming reputations and disproportionately affecting marginalized groups.

Solutions Overview

This blog explores a dual approach to addressing these issues: leveraging Online Reputation Management (ORM) to manage public-facing narratives and integrating human feedback mechanisms to refine GenAI Large Language Models (LLMs).

By combining these strategies, individuals, brands, organizations, and community groups can mitigate misinformation, protect reputations, and build trust in an increasingly AI-driven world.

Blog Source

This blog post is derived from the comprehensive whitepaper “GenAI LLM Misinformation: Comprehensive Strategies to Safeguard Digital Narratives and Build Trust” by Steven W. Giovinco, which is pending publication.

Key Causes of AI Misinformation

Hallucinated Content: AI generates plausible-sounding but false information that is often hard to spot and misleading because it is presented with an authoritative tone.

Automated Amplification by Bots: Bots flood platforms with misleading posts, manipulate algorithms, and create the illusion of widespread support for false narratives.

Coherent but False Narratives: AI can craft complete, seemingly credible but fabricated stories that reflect biases or flawed training data and can influence opinions and decisions.

Importance of Addressing False GenAI Information

AI-generated misinformation is pervasive and fast-moving. Because AI can create and disseminate content at unprecedented speed, false information can quickly spiral out of control, often outpacing efforts to correct it. Understanding how this happens is key.

- Fast Spread: Misinformation outpaces corrections, distorting perceptions.

- Key Sources: Hallucinations, bots, false narratives, and deepfakes.

- Amplifiers: Bias, context gaps, and algorithm manipulation worsen impacts.

- Result: Trust erodes; proactive solutions are critical.

Examples of GenAI Misinformation

- Financial Sector: In 2024, an AI-generated video falsely claimed that Tesla had acquired Ford Motor Company.

- Education Sector: A Denver school administrator outlined six concerns about AI, including biased algorithms, unintended consequences, and the ability of AI systems to “hallucinate” or fabricate information.

- Politics: A 2022 deepfake of Ukrainian President Zelenskyy falsely urging soldiers to surrender aired briefly before being debunked (BBC News, 2022).

These and many more examples illustrate the urgent need to address misinformation.

5 Effective Solutions: Online Reputation Management (ORM)

Online reputation management is a cornerstone strategy for combating negative items appearing in Google search results.

But it also plays an important role in combating generative AI misinformation.

The key reasons: ChatGPT and Gemini sometimes draw on live search results from Google and Bing, and LLMs were trained, in part, on information sourced online.

Here are five key ORM approaches:

Step 1: Develop Strategy: Identify misinformation, assess scope, understand the audience, and plan content to correct inaccuracies.

Step 2: Build Presence: Update profiles and websites, and create engaging content across relevant platforms to strengthen visibility and trust.

Step 3: Publish Quality Content: Focus on creating blogs, videos, case studies, and infographics to address falsehoods and demonstrate expertise. This is a time-consuming but key step.

Step 4: Engage Relationships: Interact authentically with followers and influencers to build trust and amplify credibility.

Step 5: Monitor and Evolve: Track results, adapt strategies, and produce fresh content to stay ahead of misinformation trends. Both Google search and generative AI technology are currently in constant flux.
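To make "track results" in Step 5 concrete, here is a minimal sketch of one possible metric. It assumes you already have an ordered list of result URLs for a tracked search term (collected manually or from a rank-tracking tool) and a set of known negative URLs. The function name `suppression_report`, the page-one cutoff of 10, and the definition of "suppression" as "not on page one" are illustrative assumptions, not a standard ORM formula.

```python
def suppression_report(ranked_urls: list[str], negative_urls: set[str], page_size: int = 10) -> dict:
    """Hypothetical helper: show where known-negative URLs rank on page one
    and what percentage of them have been pushed off page one."""
    first_page = ranked_urls[:page_size]
    # 1-based positions of negative URLs that still appear on page one
    positions = {url: first_page.index(url) + 1 for url in negative_urls if url in first_page}
    suppressed = len(negative_urls) - len(positions)
    # Assumption: with no tracked negative URLs, treat suppression as complete
    rate = 100.0 * suppressed / len(negative_urls) if negative_urls else 100.0
    return {"on_page_one": positions, "suppression_pct": rate}


if __name__ == "__main__":
    # Placeholder URLs for illustration only
    ranked = [
        "https://example.com/about",
        "https://news.example.net/false-claim",
        "https://example.com/blog",
    ]
    negatives = {"https://news.example.net/false-claim", "https://forum.example.org/rumor"}
    print(suppression_report(ranked, negatives))
```

Running the same check on a schedule, and separately for each country or search engine you monitor, gives a simple trend line for whether fresh content is displacing negative results.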

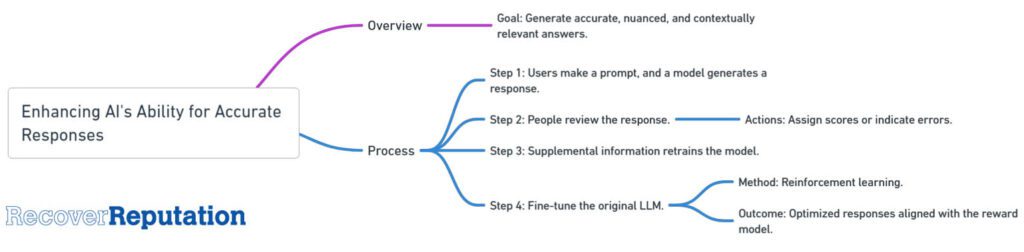

Enhancing GenAI Models Using Human Feedback

Improving AI accuracy requires refining the systems or large language models that generate misinformation. These five strategies are essential:

Step 1: Target weaknesses: Address inaccuracies, biases, and gaps in AI responses. Generally, these should match the terms addressed in the online reputation management work above.

Step 2: Curate data: Research and gather improved information, or datasets, from expert-reviewed sources.

Step 3: Incorporate feedback: Use human expertise to refine outputs for accuracy and inclusivity on platforms such as ChatGPT, Gemini, and Perplexity.

Step 4: Monitor and audit: Continuously test, log errors, and ensure ethical compliance.

Step 5: Adapt proactively: Stay updated with trends and user feedback for improvement.
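Step 4 ("continuously test, log errors") can be approximated with a small audit script. The sketch below is a hypothetical illustration, not the whitepaper's method: it assumes a curated list of expert-reviewed checks (Step 2), each with phrases a correct answer should contain and known false claims that signal misinformation. The `ask` parameter stands in for whatever function you use to query a model; no real ChatGPT or Gemini API is called here, and simple phrase matching is only a first-pass filter ahead of human review.

```python
import logging
from dataclasses import dataclass, field
from typing import Callable

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("llm_audit")


@dataclass
class FactCheck:
    """One curated, expert-reviewed check (hypothetical schema)."""
    prompt: str                    # question posed to the model
    must_mention: list[str]        # phrases a correct answer should contain
    known_falsehoods: list[str] = field(default_factory=list)  # claims that signal misinformation


def audit(ask: Callable[[str], str], checks: list[FactCheck]) -> list[dict]:
    """Send each prompt through `ask` and flag answers for human review."""
    flagged = []
    for check in checks:
        answer = ask(check.prompt).lower()
        missing = [p for p in check.must_mention if p.lower() not in answer]
        false_hits = [p for p in check.known_falsehoods if p.lower() in answer]
        if missing or false_hits:
            # Log the error so reviewers can correct it and track it over time
            log.warning("Flagged %r: missing=%s, falsehoods=%s", check.prompt, missing, false_hits)
            flagged.append({"prompt": check.prompt, "missing": missing, "falsehoods": false_hits})
    return flagged


if __name__ == "__main__":
    # Stub model standing in for a real LLM call; the organization is fictional
    def stub_model(prompt: str) -> str:
        return "Example Energy Group was founded in 2015. Its CEO was charged with fraud."

    checks = [
        FactCheck(
            prompt="Who runs Example Energy Group?",
            must_mention=["founded in 2015"],
            known_falsehoods=["charged with fraud"],
        )
    ]
    print(audit(stub_model, checks))
```

Flagged items feed back into Steps 3 and 5: a human reviewer confirms the error, submits corrections through the platform's feedback channels, and adds new checks as misinformation trends shift.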

Case Study: ORM and GenAI Strategies for Misinformation Management

Key Points:

- Challenge: A sustainable energy group was targeted by false online claims about its leadership, resulting in six negative search results across five countries and no presence in Gemini's responses.

- ORM Strategies: Developed optimized content, updated platforms, and engaged influencers to enhance credibility and suppress misinformation.

- GenAI Feedback: Identified gaps in LLM responses, implemented human review, and continuously adjusted outputs for improved visibility.

- Outcome: Achieved 100% suppression of negative search results and created a positive Gemini presence within six months, with a 25% increase in positive mentions online.

Actions and Solutions

In 2023, a sustainable energy group faced reputational harm due to false claims about its leadership, threatening its ability to secure funding. A strategic combination of online reputation management and Generative AI adjustments addressed the challenge.

High-quality content creation, SEO optimization, and updates to platforms like Wikipedia and Gemini were key components.

Additionally, gaps in LLM responses were identified and refined using human feedback.

Within six months, the group eliminated negative content from search results, established a positive online presence, and restored stakeholder trust, demonstrating the effectiveness of a targeted ORM and GenAI approach.

Timeline for ORM and GenAI Repair: A Six-Month Plan

ORM and GenAI repair typically spans six months and approximately 200 hours. The process involves:

- Month 1: Develop a strategy by auditing reputation, identifying issues, and setting up monitoring tools while starting content creation.

- Month 2: Launch optimized websites, publish blogs and posts, and provide feedback to GenAI platforms to refine outputs.

- Month 3: Enhance visibility through additional content, link-building, and AI output refinement while suppressing negative links.

- Months 4-5: Intensify content creation, engage communities, and monitor outcomes to strengthen positive narratives.

- Month 6: Consolidate efforts by publishing new content, maintaining AI corrections, and implementing a long-term management plan.

This structured approach effectively suppresses misinformation, builds credibility, and establishes a resilient online presence.

Ethical Generative AI: Summary and Key Points

Generative AI, when used ethically and accurately, can restore trust, empower underrepresented groups, and foster global collaboration.

However, as AI advances, challenges like misinformation require proactive strategies, including advanced detection systems, multi-modal analysis, and continuous learning.

Policy frameworks, public education, and incentives for ethical AI development are critical to balancing innovation with accountability. By addressing these issues, generative AI can spotlight global challenges, drive meaningful collaborations, and empower communities to reclaim their narratives.

Key Points:

- Ethical generative AI builds trust, equity, and collaboration across sectors.

- Challenges include combating misinformation through detection, analysis, and oversight.

- Policies promoting transparency, accountability, and public education are essential.

- AI can address global issues like inequality and climate change while fostering community empowerment.

Bottom Line

The fight against AI-driven misinformation isn’t just a technical challenge; it’s a societal imperative. By adopting proactive ORM strategies, refining AI systems, and engaging diverse communities, we can turn generative AI large language models such as ChatGPT from a source of risk into a tool for trust and equity. The whitepaper behind this post serves as a roadmap for stakeholders to reclaim digital narratives, ensuring that AI empowers communities rather than eroding trust. The time to act is now.

Learn More About Combating AI Misinformation

This blog post is derived from the comprehensive whitepaper “GenAI LLM Misinformation: Comprehensive Strategies to Safeguard Digital Narratives and Build Trust” by Steven W. Giovinco. The whitepaper delves deeply into managing Generative AI misinformation, offering real-world examples, actionable solutions, and advanced strategies like Online Reputation Management (ORM) and refining AI systems through human feedback.

Topics covered include:

- The societal impacts of AI misinformation.

- Practical ORM and SEO techniques for reputation management.

- Advanced methods to improve AI accuracy, such as Reinforcement Learning from Human Feedback (RLHF).

- Real-world case studies showcasing effective solutions.

- Policy frameworks and broader implications of ethical AI.
